Abstract

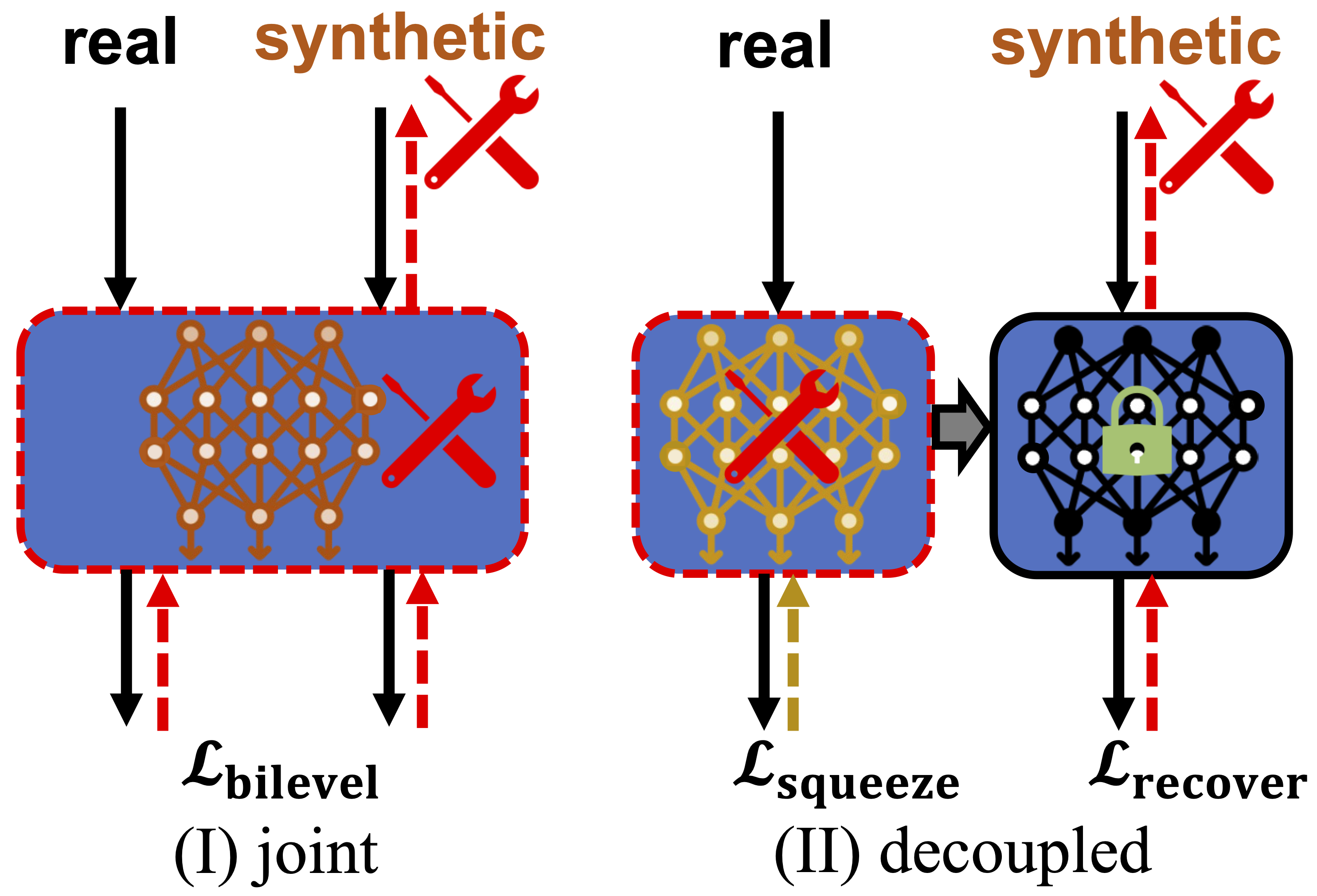

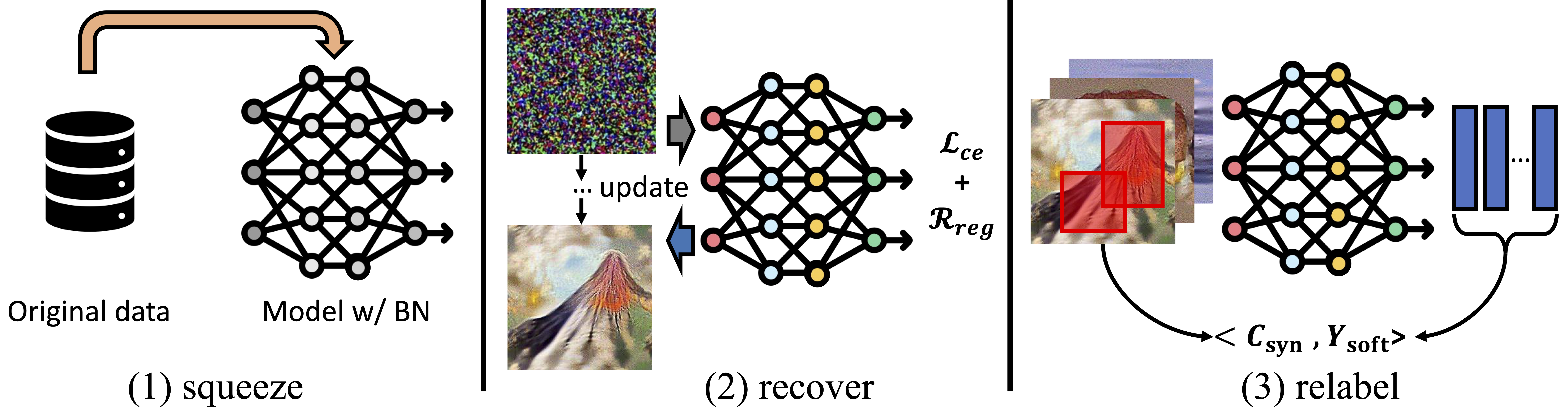

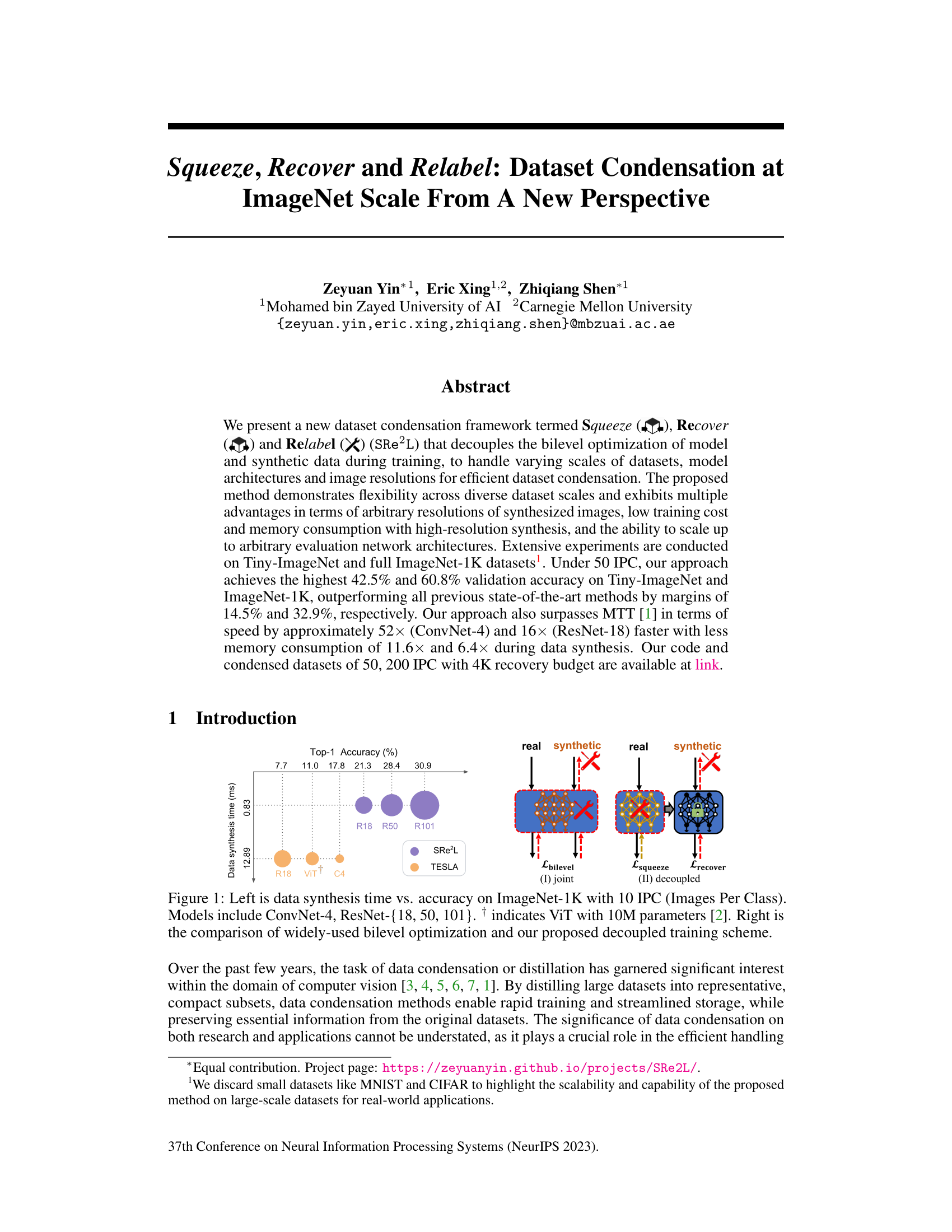

We present a new dataset condensation framework termed Squeeze ( ), Recover (

), Recover ( ) and Relabel (

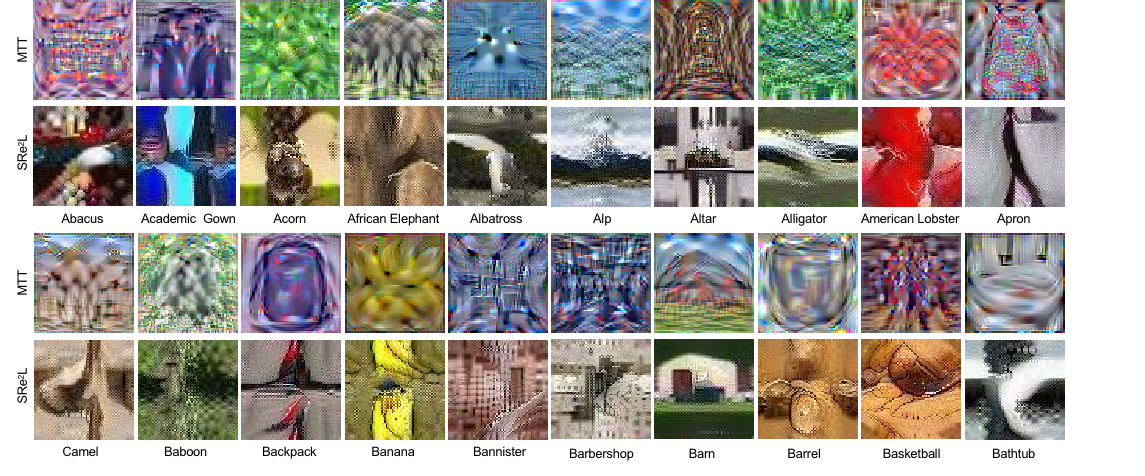

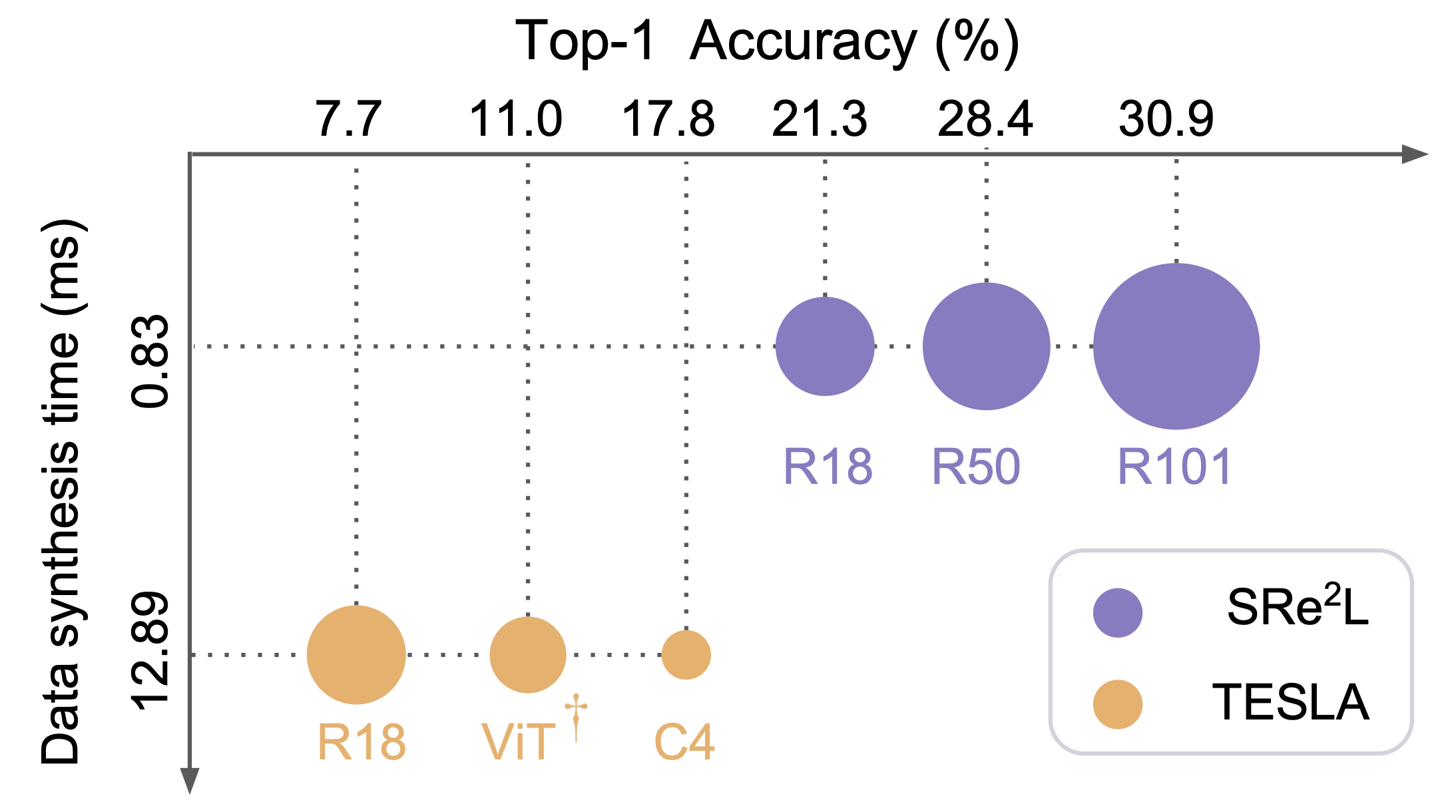

) and Relabel ( ) (SRe2L) that decouples the bilevel optimization of model and synthetic data during training, to handle varying scales of datasets, model architectures and image resolutions for effective dataset condensation. The proposed method demonstrates flexibility across diverse dataset scales and exhibits multiple advantages in terms of arbitrary resolutions of synthesized images, low training cost and memory consumption with high-resolution training, and the ability to scale up to arbitrary evaluation network architectures. Extensive experiments are conducted on Tiny-ImageNet and full ImageNet-1K datasets. Under 50 IPC, our approach achieves the highest 42.5% and 60.8% validation accuracy on Tiny-ImageNet and ImageNet-1K, outperforming all previous state-of-the-art methods by margins of 14.5% and 32.9%, respectively. Our approach also outperforms MTT by approximately 52× (ConvNet-4) and 16× (ResNet-18) faster in speed with less memory consumption of 11.6× and 6.4× during data synthesis.
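The decoupling of the three stages can be illustrated on a toy problem. The sketch below is not the authors' implementation. It assumes a 2-D, two-class Gaussian dataset, uses logistic regression in place of a deep network, and stands in per-feature input mean and variance for the BatchNorm running statistics matched during recovery, using a squared-error penalty as a simplification. The function names (`squeeze`, `recover`, `relabel`, `train_logreg`) are hypothetical. What it shows is the structure: the model is trained once and then frozen while only the synthetic inputs are optimized, so no bilevel loop is needed.

```python
import math
import random

# Toy stand-ins (assumptions, not the SRe2L code): a 2-D two-class dataset,
# logistic regression as the "model", and per-feature input mean/variance
# in place of BatchNorm running statistics.

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(model, x):
    w, b = model
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def train_logreg(xs, ys, epochs=300, lr=0.1):
    """Full-batch gradient descent on binary cross-entropy; ys may be soft."""
    w, b, n = [0.0, 0.0], 0.0, len(xs)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in zip(xs, ys):
            err = predict((w, b), x) - y  # dBCE/dlogit, also valid for soft y
            gw[0] += err * x[0] / n
            gw[1] += err * x[1] / n
            gb += err / n
        w = [w[0] - lr * gw[0], w[1] - lr * gw[1]]
        b -= lr * gb
    return w, b

def moments(xs, d):
    vals = [x[d] for x in xs]
    m = sum(vals) / len(vals)
    return m, sum((t - m) ** 2 for t in vals) / len(vals)

def squeeze(xs, ys):
    # Stage 1: train the model once on the original data and keep its statistics.
    return train_logreg(xs, ys), [moments(xs, d) for d in range(2)]

def recover(model, stats, ipc=5, steps=500, lr=0.1, lam=1.0, seed=0):
    # Stage 2: the model is frozen; only the synthetic inputs are optimized.
    rng = random.Random(seed)
    targets = [c for c in (0, 1) for _ in range(ipc)]
    syn = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in targets]
    n, w = len(syn), model[0]
    for _ in range(steps):
        grads = [[0.0, 0.0] for _ in syn]
        for i, (x, y) in enumerate(zip(syn, targets)):
            err = predict(model, x) - y  # cross-entropy toward the target class
            for d in range(2):
                grads[i][d] += err * w[d] / n
        for d in range(2):
            m, v = moments(syn, d)
            mu, var = stats[d]
            for i, x in enumerate(syn):
                # gradient of lam * ((m - mu)^2 + (v - var)^2) w.r.t. x[d]
                grads[i][d] += lam * (2 * (m - mu) / n + 4 * (v - var) * (x[d] - m) / n)
        for i in range(n):
            for d in range(2):
                syn[i][d] -= lr * grads[i][d]
    return syn, targets

def relabel(teacher, syn):
    # Stage 3: replace hard targets with the teacher's soft predictions.
    return [predict(teacher, x) for x in syn]

rng = random.Random(42)
xs, ys = [], []
for c, center in ((0, -2.0), (1, 2.0)):
    for _ in range(200):
        xs.append([rng.gauss(center, 1.0), rng.gauss(center, 1.0)])
        ys.append(c)

teacher, stats = squeeze(xs, ys)
syn, targets = recover(teacher, stats)
soft = relabel(teacher, syn)
student = train_logreg(syn, soft)  # train on the 10 synthetic points only
acc = sum((predict(student, x) > 0.5) == (y == 1) for x, y in zip(xs, ys)) / len(xs)
print(f"student accuracy on the original data: {acc:.3f}")
```

In this toy convex setting, the soft labels are exactly the teacher's probabilities, so the student's soft-label loss is minimized at the teacher's own parameters. That is one way to see why relabeling can transfer the squeezed model's knowledge even when very few synthetic samples remain.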

Distillation Animation

Distilled ImageNet

Download

Distilled Data

- ImageNet-Iteration2K-IPC50 [718MB]

- ImageNet-Iteration4K-IPC50 [659MB]

- ImageNet-Iteration4K-IPC200 [2.58GB]

- Tiny-ImageNet-Iteration1K-IPC50 [20.1MB]

- Tiny-ImageNet-Iteration4K-IPC100 [40.7MB]

Soft Labels

Mixup augmentation:

CutMix augmentation:
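One possible reading of these soft labels is sketched below under explicit assumptions. It assumes, as in FKD-style relabeling, that the frozen teacher is queried on the augmented (mixed) synthetic image and that its prediction is stored together with the augmentation parameters. Under that assumption, the label is the teacher's output on the mixed input rather than a λ-weighted blend of one-hot labels. The 8×8 images, `toy_teacher`, and the record layout are hypothetical and do not describe the format of the released files.

```python
import math
import random

def mixup(img_a, img_b, lam):
    """Pixel-wise convex combination lam * a + (1 - lam) * b."""
    return [[lam * pa + (1 - lam) * pb for pa, pb in zip(ra, rb)]
            for ra, rb in zip(img_a, img_b)]

def cutmix_box(h, w, lam, rng):
    """Sample a box whose area is roughly (1 - lam) of the image, clipped to bounds."""
    cut = math.sqrt(1.0 - lam)
    ch, cw = int(h * cut), int(w * cut)
    cy, cx = rng.randrange(h), rng.randrange(w)
    return (max(cy - ch // 2, 0), min(cy + ch // 2, h),
            max(cx - cw // 2, 0), min(cx + cw // 2, w))

def cutmix(img_a, img_b, box):
    """Copy img_a and paste img_b's pixels inside box = (y0, y1, x0, x1)."""
    y0, y1, x0, x1 = box
    out = [row[:] for row in img_a]
    for y in range(y0, y1):
        out[y][x0:x1] = img_b[y][x0:x1]
    return out

def toy_teacher(img):
    """Hypothetical frozen teacher: 2-class softmax driven by mean pixel intensity."""
    m = sum(map(sum, img)) / (len(img) * len(img[0]))
    logits = [4.0 * (0.5 - m), 4.0 * (m - 0.5)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
dark = [[0.0] * 8 for _ in range(8)]    # toy synthetic image of class 0
bright = [[1.0] * 8 for _ in range(8)]  # toy synthetic image of class 1

records = []  # (augmentation parameters, teacher soft label)
for step in range(4):
    lam = rng.betavariate(1.0, 1.0)
    if step % 2 == 0:
        view, params = mixup(dark, bright, lam), ("mixup", lam)
    else:
        box = cutmix_box(8, 8, lam, rng)
        view, params = cutmix(dark, bright, box), ("cutmix", box)
    # The label is the teacher's prediction on the mixed view itself,
    # not lam * onehot(0) + (1 - lam) * onehot(1).
    records.append((params, toy_teacher(view)))

for params, soft in records:
    print(params, [round(p, 3) for p in soft])
```

Storing the augmentation parameters next to each soft label means the same mixed view can be regenerated at student-training time without rerunning the teacher.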

Paper

Z. Yin, E. Xing and Z. Shen. Squeeze, Recover and Relabel: Dataset Condensation at ImageNet Scale From A New Perspective

NeurIPS 2023 spotlight

@inproceedings{yin2023squeeze,
  title={Squeeze, Recover and Relabel: Dataset Condensation at ImageNet Scale From A New Perspective},
  author={Yin, Zeyuan and Xing, Eric and Shen, Zhiqiang},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023},
}